Ever wondered how life looked for people in earlier times? What colour clothes they wore? How old films would look in colour? Or how Delhi looked in 1911?

Black and white photography has its own emotional impact: you get rid of the populist distractions and kitschy stereotypes of colour. The skies are blue and the roses are red. That's obvious. But what lies within? The lights, the shadows, the contrasts make up a rich world, and that's what black and white photography expresses directly. Still, colours speak louder than words. Colours create, enhance, change, establish, and reveal the mood of a person, a painting, and nature itself. We can't imagine life without them.

Before my mood turns pensive and I drift completely off topic, let's dive into the theory. We'll be colouring old black and white images and videos, adding life to them!

We'll start with a very interesting and foundational concept in Deep Learning: Autoencoders.

Autoencoders

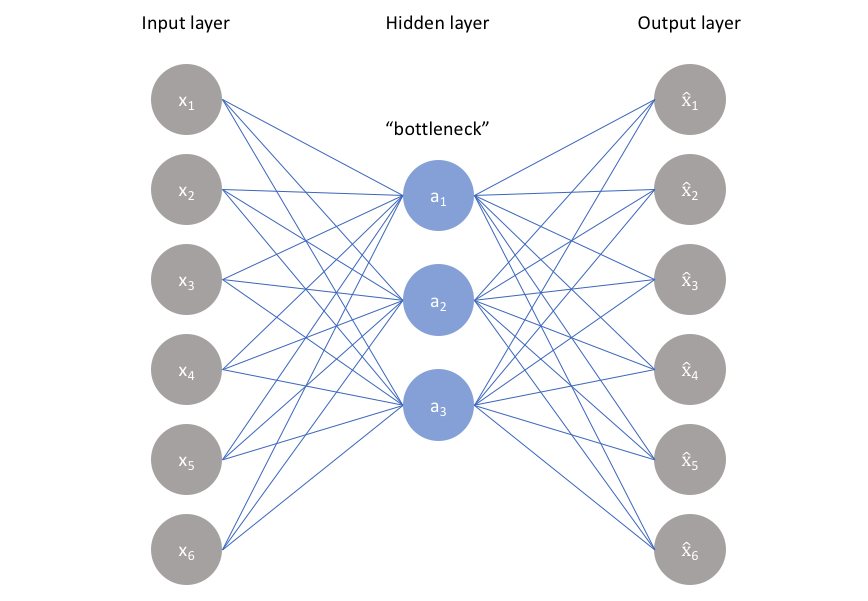

Autoencoders are an unsupervised learning technique in which we leverage neural networks for the task of representation learning. We design a neural network architecture with a bottleneck — a compressed knowledge representation of the original input. If the input features were independent of one another, compression and reconstruction would be very difficult. However, if some sort of structure exists in the data (i.e. correlations between input features), this structure can be learned and leveraged when forcing the input through the bottleneck.

As visualized above, we take an unlabeled dataset and frame it as a supervised learning problem — tasked with outputting x̂, a reconstruction of the original input x. The network is trained by minimizing the reconstruction error L(x, x̂), which measures the differences between the original input and its reconstruction. The bottleneck is key — without it, the network could simply memorize the input values by passing them through unchanged.
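To make the bottleneck idea concrete, here is a minimal sketch using only NumPy. It assumes a purely linear encoder and decoder, mean squared error as the reconstruction error L(x, x̂), and toy data whose 8 features are mixes of 2 hidden factors. All names (`w_enc`, `w_dec`, `reconstruction_error`) and hyperparameters are illustrative choices, not something taken from a particular library.

```python
import numpy as np

rng = np.random.default_rng(0)

# Toy data with structure: 8 correlated features built from 2 hidden factors.
factors = rng.normal(size=(256, 2))
mixing = rng.normal(size=(2, 8))
x = factors @ mixing

# Encoder (8 -> 2) and decoder (2 -> 8). The 2-unit code is the bottleneck.
w_enc = rng.normal(scale=0.1, size=(8, 2))
w_dec = rng.normal(scale=0.1, size=(2, 8))


def reconstruction_error(x, x_hat):
    """Mean squared error between the input and its reconstruction."""
    return float(np.mean((x - x_hat) ** 2))


def forward(x):
    """Push the input through the bottleneck and back out."""
    code = x @ w_enc       # compressed representation
    x_hat = code @ w_dec   # reconstruction of the input
    return code, x_hat


initial_loss = reconstruction_error(x, forward(x)[1])

learning_rate = 0.05  # assumed value, chosen for this toy problem
for _ in range(2000):
    code, x_hat = forward(x)
    grad_out = 2.0 * (x_hat - x) / x.size          # dL/dx_hat
    grad_dec = code.T @ grad_out                   # dL/dw_dec
    grad_enc = x.T @ (grad_out @ w_dec.T)          # dL/dw_enc
    w_dec -= learning_rate * grad_dec
    w_enc -= learning_rate * grad_enc

final_loss = reconstruction_error(x, forward(x)[1])
print(f"reconstruction error: {initial_loss:.4f} -> {final_loss:.6f}")
```

Because the toy data really does have only two underlying factors, a two-unit code can carry almost everything the decoder needs. With independent features, the same bottleneck would lose information and the error would stay high.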

We'll discuss Autoencoders and their variants in more depth in upcoming posts. For now, this intuition is enough to understand colourization.

Colourization

Given our intuition about Autoencoders — what if we feed a pool of coloured images as input, force the encoder to produce a black and white representation as its compressed output, and then train the decoder to reconstruct the original coloured image from that encoding?

In this way, the model learns to extract the important structural features as the encoding, then reconstructs colour from them. This trained model can then be used to colourize any black and white image or video, which is exactly our target.
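One way to picture the training data for this setup is as pairs of (black and white image, original colour image). The sketch below assumes the black and white version is computed with a fixed luma formula (the ITU-R BT.601 weights) rather than learned by the encoder, and that images are float arrays in [0, 1]. The helper names `to_grayscale` and `make_training_pair` are hypothetical, and a random array stands in for a real photo.

```python
import numpy as np

# ITU-R BT.601 luma weights for the red, green and blue channels.
LUMA_WEIGHTS = np.array([0.299, 0.587, 0.114])


def to_grayscale(rgb):
    """Convert an (H, W, 3) RGB image into an (H, W, 1) grayscale image."""
    return (rgb @ LUMA_WEIGHTS)[..., np.newaxis]


def make_training_pair(rgb):
    """Return (input, target): the grayscale image and the colour original."""
    return to_grayscale(rgb), rgb


# A random 4x4 "image" used purely as a placeholder for a real photo.
rng = np.random.default_rng(0)
colour_image = rng.random((4, 4, 3))

gray_input, colour_target = make_training_pair(colour_image)
print(gray_input.shape, colour_target.shape)
```

A colourization model would then be trained to map `gray_input` back to `colour_target`, using a reconstruction error just like the one described for Autoencoders.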

Let's Code

Since this is computationally demanding, we'll use Google Colab for compute and a pretrained fast.ai model for quick and precise results. Try to use shorter videos with clear, sharp frames, and set the render factor to 21.